About me

Welcome to my personal homepage! I am Chuwei Wang, a Ph.D. student in the Department of Computing and Mathematical Sciences (CMS) at Caltech. I am fortunate to be advised by Professors Anima Anandkumar and Andrew Stuart. Prior to joining Caltech in 2023, I obtained my B.S. degree in computational mathematics from the School of Mathematical Sciences (SMS), Peking University, where I was advised by Professors Liwei Wang and Di He.

My research goal is to develop practical and powerful machine learning tools for scientific problems through theory, algorithm design, and high-performance computing. I am also fascinated by infinite-dimensional objects.

Specifically, I am currently interested in:

- Operator learning.

- Application of deep learning in physical sciences.

- Closure modeling (a.k.a. coarse graining, reduced-order modeling, etc.).

- Sampling and optimal transport.

I love working with collaborators from various scientific backgrounds. If you are interested in my research, please feel free to contact me!

News

- Feb. 2025 Gave a talk at the Alan Turing Institute.

- Nov. 2024 Named a Top Reviewer of NeurIPS 2024.

- Aug. 2024 Gave a talk on data-driven closure models at the IAIFI summer workshop.

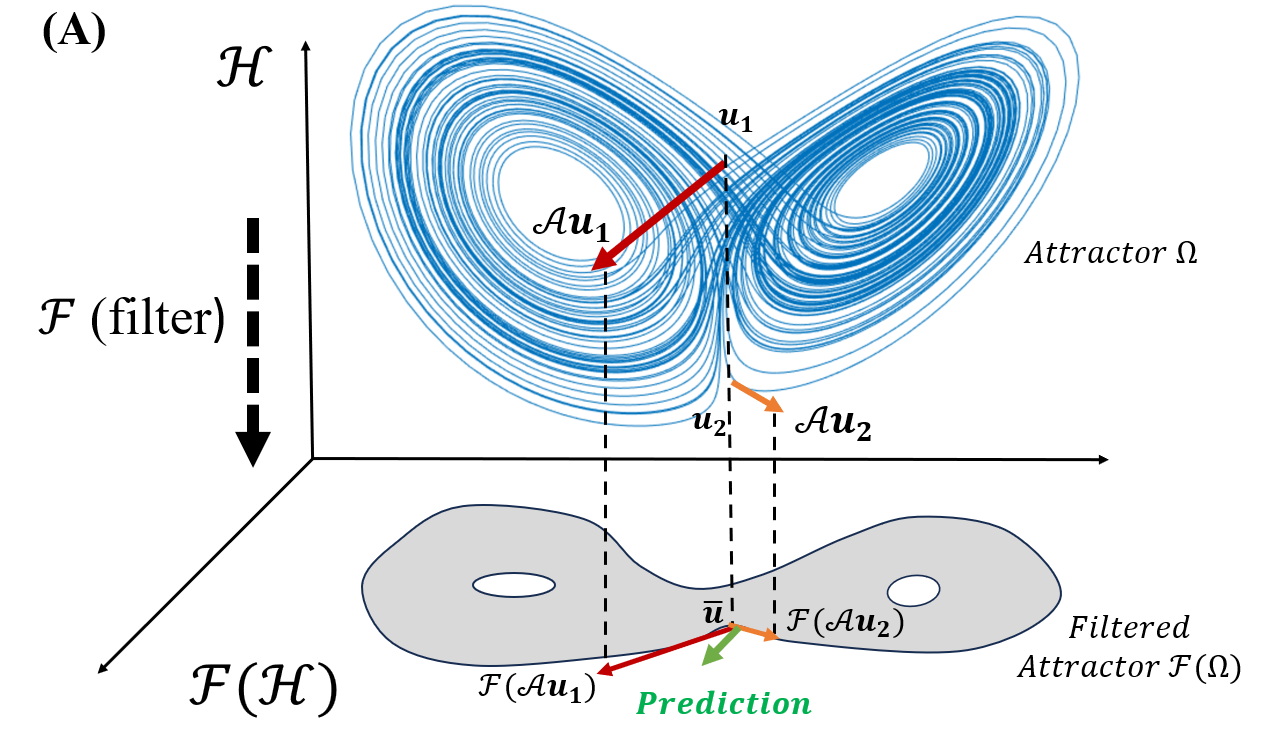

- Aug. 2024 Our new work on closure modeling is released on arXiv. We reveal the fundamental shortcomings of existing learning-based closure models and propose a new learning framework, achieving both tremendous speedup and higher accuracy.

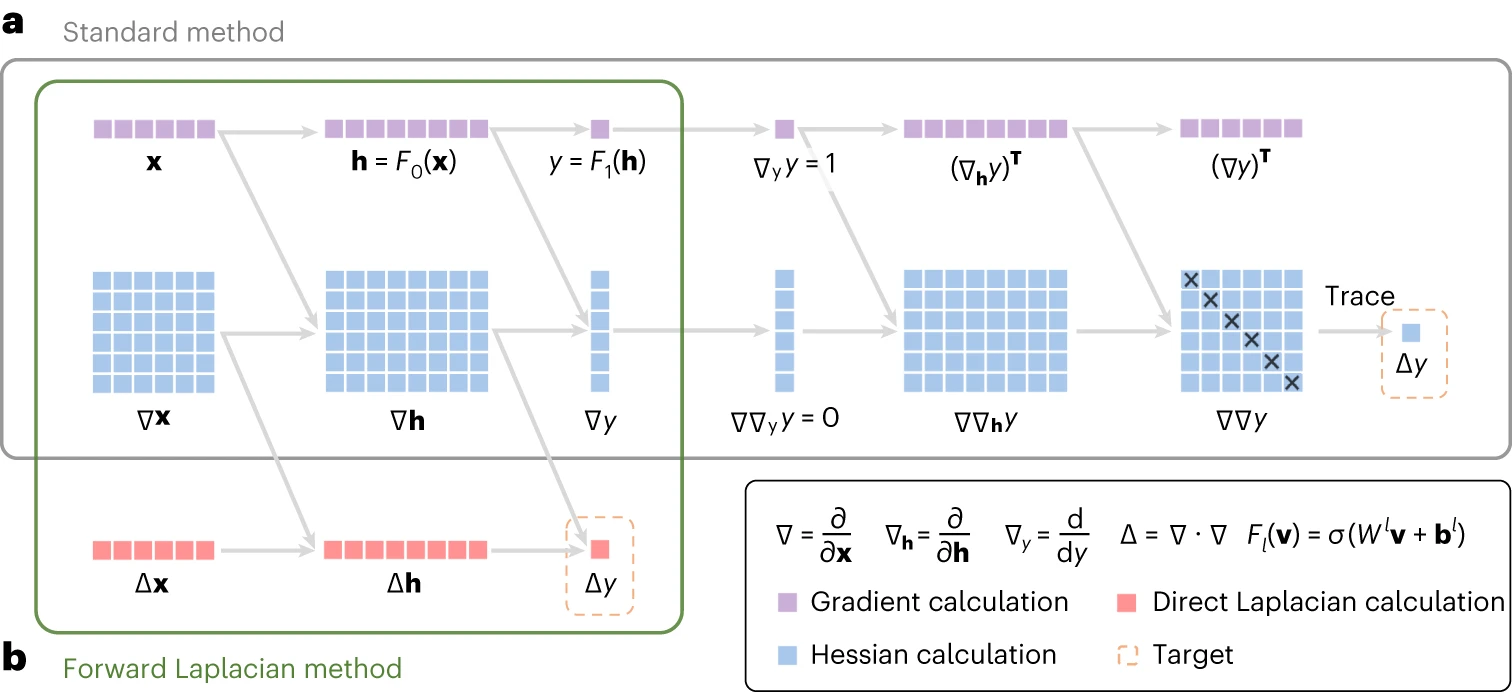

- May 2024 We extend Forward Laplacian to accommodate general high-order differential operators. The paper appears at the ICLR 2024 workshop on AI4DifferentialEquations.

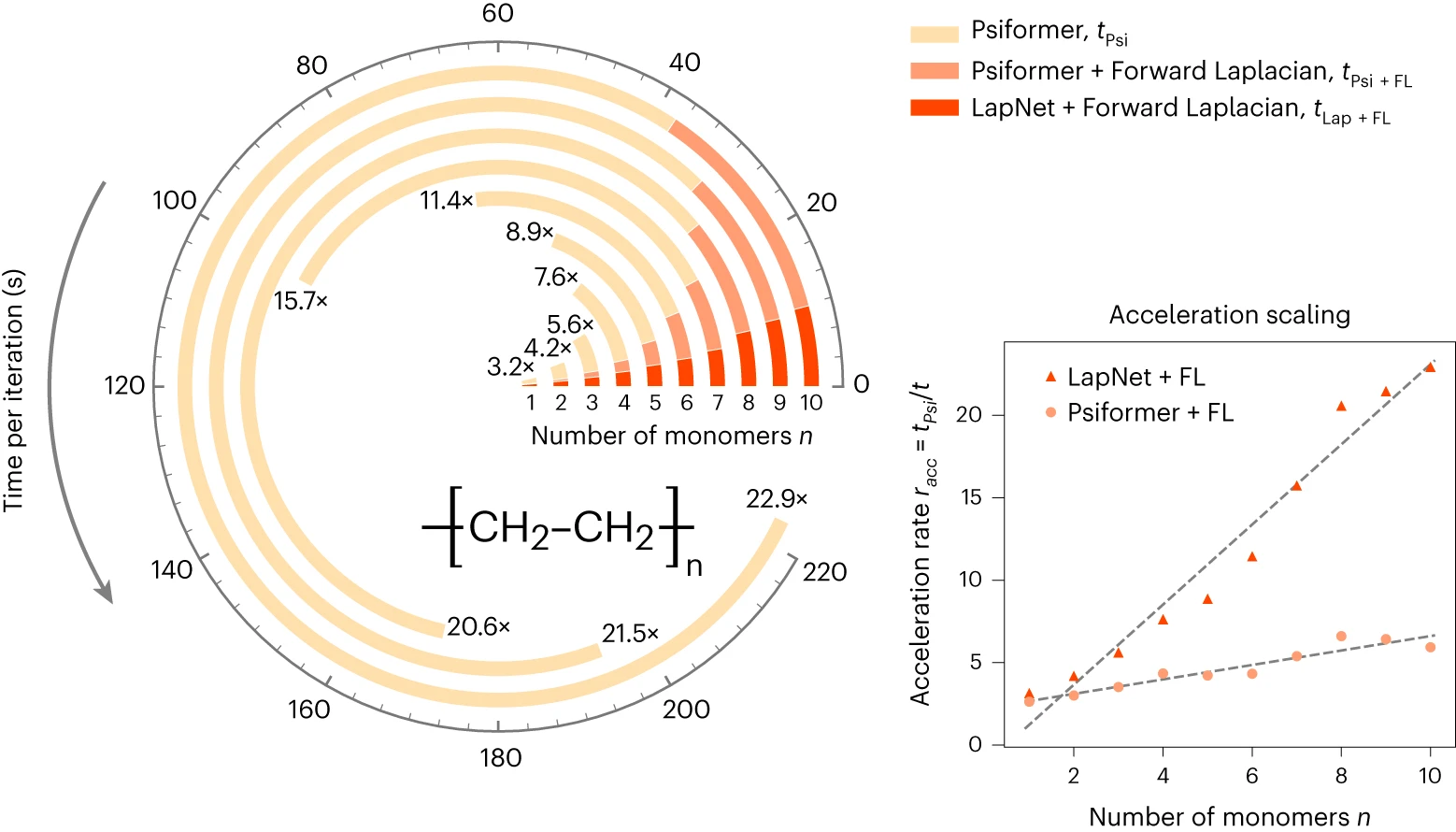

- Feb. 2024 Our Forward Laplacian paper was accepted by Nature Machine Intelligence. We achieve more than 20x speedup on ground-state energy computation (QMC) for quantum systems with \(\sim 10^2\) electrons.

Selected Recent Works

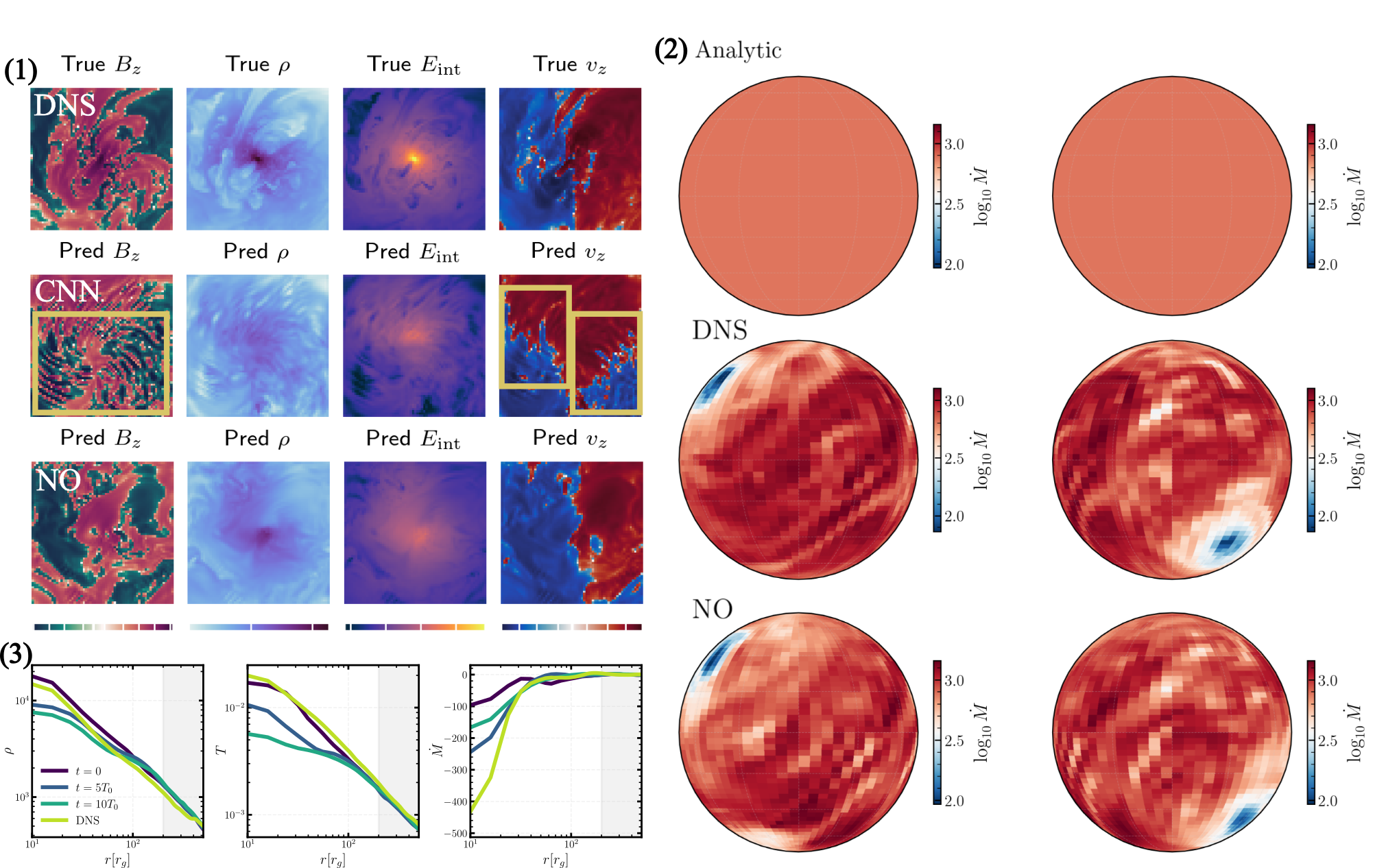

From Black Hole to Galaxy: Neural Operator Framework for Accretion and Feedback Dynamics

Nihaal Bhojwani*, Chuwei Wang*, Hai-Yang Wang*, Chang Sun, Elias Most, Anima Anandkumar

NeurIPS 2025 workshop on ML for Physical Sciences

Beyond Closure Models: Learning Chaotic-Systems via Physics-Informed Neural Operators

Chuwei Wang, Julius Berner, Zongyi Li, Di Zhou, Jiayun Wang, Jane Bae, Anima Anandkumar

Preprint

DOF: Accelerating High-order Differential Operators with Forward Propagation

Ruichen Li*, Chuwei Wang*, Haotian Ye*, Di He, Liwei Wang

ICLR 2024 workshop on AI4DifferentialEquations

A computational framework for neural network-based variational Monte Carlo with Forward Laplacian

Ruichen Li*, Haotian Ye*, Du Jiang, Xuelan Wen, Chuwei Wang, Zhe Li, Xiang Li, Di He, Ji Chen, Weiluo Ren, Liwei Wang

Nature Machine Intelligence 2024

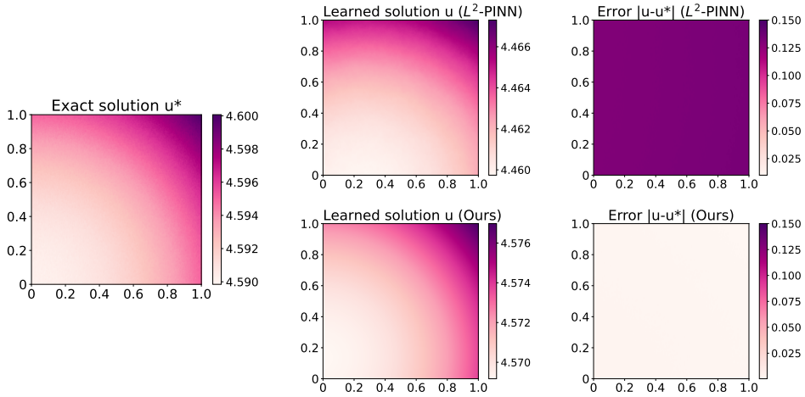

Is \(L^2\) Physics-Informed Loss Always Suitable for Training Physics-Informed Neural Network?

Chuwei Wang*, Shanda Li*, Di He, Liwei Wang

NeurIPS 2022

Services

- Reviewer for JMLR, Nature Machine Intelligence (NMI), NeurIPS, ICLR, ICML.

- Area Chair for the AI+Science Workshop, NeurIPS 2025.

- Organizing Committee member of the Keller Colloquium.

Selected Awards

- Nov. 2024 Top Reviewer of NeurIPS 2024.

- Jun. 2023 Peking University Excellent Graduate.

- Aug. 2022 Bronze Medal (ranked No. 6 overall), S.T. Yau College Student Mathematics Competition, individual contest in analysis and partial differential equations.

- May 2021 Elite Undergraduate Training Program of Applied Mathematics and Statistics.

- Dec. 2020 First Prize, Chinese National College Student Physics Competition.

- Dec. 2020 First Prize (ranked No. 14 overall), Chinese National College Student Mathematics Competition.